Software Architecture for ETLs

Hexagonal, MVC, Layers, custom, ... I tend to keep it simple

I’ve recently started working with a team to refactor a big script into a scalable and maintainable application. The current script fits very well into the Extract, Transform, and Load category (ETL from now on).

Based on that, and taking into account our use case, we decided to implement an AWS Serverless approach, more concretely, using an AWS Step Function.

In today’s email, I want to share the Software Architecture I tend to use in this kind of application, so that you can apply it as well.

If you have been reading me for a while, you know that I like to reduce complexity as much as possible.

👉🏼 Making things simple is complex.

Input, Output, and Use Case

As simple as it sounds.

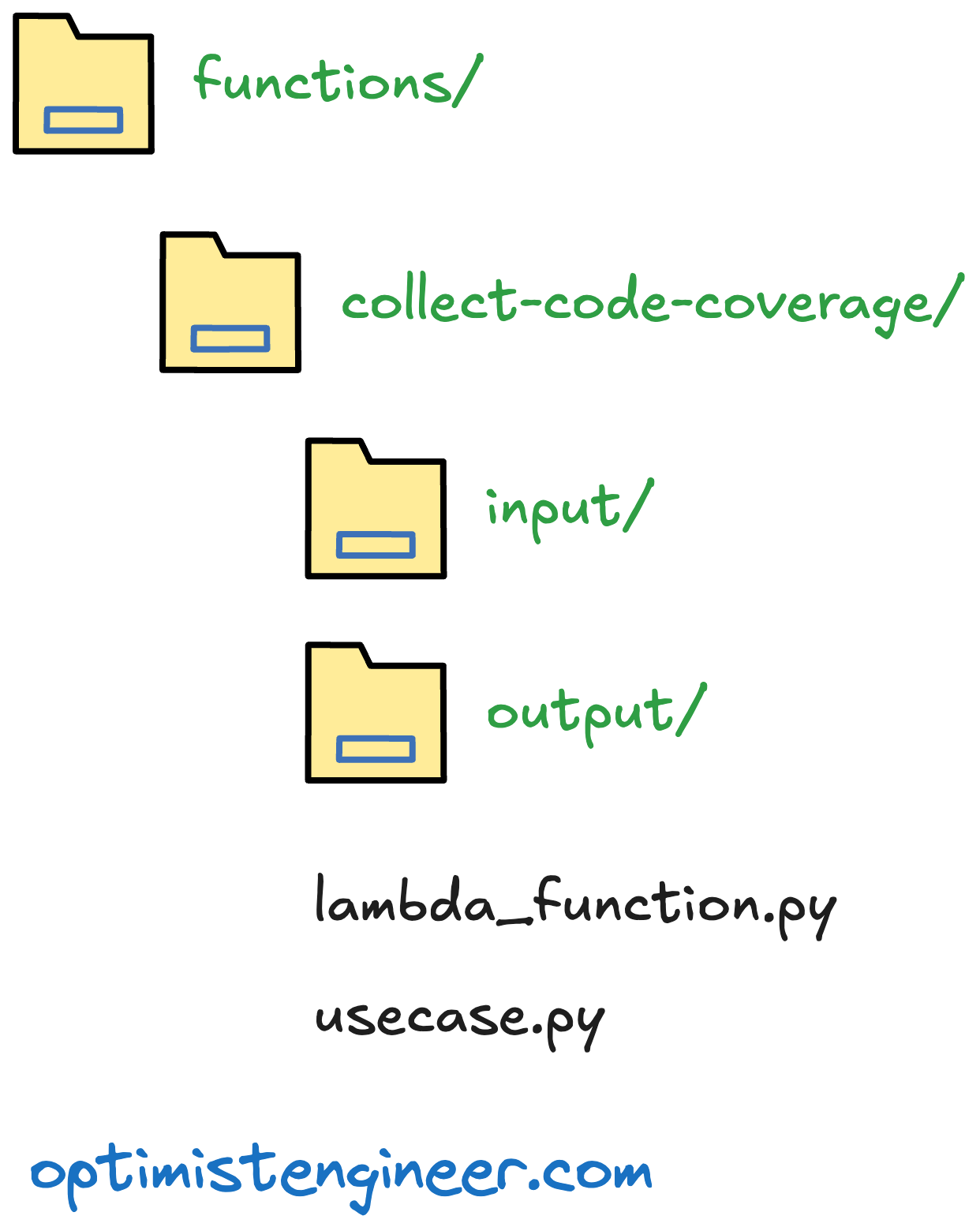

For each of the Lambda functions within a Step Function, the Software Architecture that works well for me is:

- `input`. In this package, you implement the code that reads from the outside world. For example, if your app has to download a configuration file from an S3 bucket, call a REST API endpoint, etc., you implement it here. Within the `input` package, I would advise having a sub-package per technology your app reads from, so you encapsulate each one.

- `output`. In this package, you implement the code that pushes data outside of your app. For example, if your app needs to write a file into an S3 bucket after running your business logic, this is the right place. As with `input`, I would advise having a sub-package per technology your app writes to, so you encapsulate each one.

- Use Case. Assuming you have a single use case (since this is an ETL), you can resolve your “orchestration” from that one use case, which calls the different inputs, executes the business logic, and calls the outputs.
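The three pieces above could be sketched in Python roughly as follows. This is a minimal sketch, not a definitive implementation: every name in it (`ConfigReader`, `RecordReader`, `RecordWriter`, `EtlUseCase`, the `min_score` setting) is hypothetical, and in-memory stand-ins replace real S3 or REST clients so the example runs on its own.

```python
from dataclasses import dataclass
from typing import Protocol


# --- input package: code that reads from the outside world ---
class ConfigReader(Protocol):
    def read(self) -> dict: ...


class RecordReader(Protocol):
    def read(self) -> list[dict]: ...


# --- output package: code that pushes data outside of the app ---
class RecordWriter(Protocol):
    def write(self, records: list[dict]) -> None: ...


# --- use case: the single orchestration point of the ETL ---
@dataclass
class EtlUseCase:
    config_reader: ConfigReader
    record_reader: RecordReader
    writer: RecordWriter

    def run(self) -> int:
        config = self.config_reader.read()    # extract (e.g. a config file)
        records = self.record_reader.read()   # extract (e.g. a REST API)
        min_score = config.get("min_score", 0)
        # transform: hypothetical business rule, keep records above a threshold
        kept = [r for r in records if r["score"] >= min_score]
        self.writer.write(kept)               # load (e.g. a file in a bucket)
        return len(kept)


# In-memory stand-ins (assumptions for illustration only). In a real app,
# these would be sub-packages such as input/s3, input/rest, and output/s3.
class InMemoryConfigReader:
    def __init__(self, config: dict):
        self.config = config

    def read(self) -> dict:
        return self.config


class InMemoryRecordReader:
    def __init__(self, records: list[dict]):
        self.records = records

    def read(self) -> list[dict]:
        return self.records


class InMemoryWriter:
    def __init__(self):
        self.written: list[dict] = []

    def write(self, records: list[dict]) -> None:
        self.written.extend(records)
```

The Lambda handler then only has to build the use case with the concrete readers and writers and call `run()`. Because the use case depends on small interfaces, you can test the business logic without touching AWS.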

A question that I recently got about this Software Architecture was the following:

> What happens if, from one of the APIs I use in the `input` package, I need another dataset? Could I just implement a new function in the `input` package for that and leverage the existing code?

☝🏼 That means a new domain. It does not matter whether you read data from one API or many; what matters is the domain.

For example:

If you are gathering information about Observability, it does not matter if you gather that from Datadog or New Relic; what matters is the concept of Observability (the domain) for you.

If you are gathering information about Applications, it does not matter if you gather that information from Datadog as well; what matters is the concept of Applications (the domain) for you.
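To make the Observability case concrete, here is a minimal sketch in which two sources feed the same domain object. All names (`Metric`, `ObservabilitySource`, `DatadogSource`, `NewRelicSource`) and both payload shapes are invented for illustration; they are not the real Datadog or New Relic APIs.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Metric:
    """Domain object for Observability, independent of the vendor."""
    service: str
    name: str
    value: float


class ObservabilitySource(Protocol):
    def fetch_metrics(self) -> list[Metric]: ...


class DatadogSource:
    """Would live in input/datadog. The payload shape is an assumption."""

    def __init__(self, payload: list[dict]):
        self._payload = payload

    def fetch_metrics(self) -> list[Metric]:
        return [Metric(p["svc"], p["metric"], float(p["val"])) for p in self._payload]


class NewRelicSource:
    """Would live in input/newrelic. The payload shape is an assumption."""

    def __init__(self, payload: list[dict]):
        self._payload = payload

    def fetch_metrics(self) -> list[Metric]:
        return [
            Metric(p["appName"], p["metricName"], float(p["value"]))
            for p in self._payload
        ]


def collect_observability(sources: list[ObservabilitySource]) -> list[Metric]:
    """The use case only sees Metric objects, never vendor payloads."""
    metrics: list[Metric] = []
    for source in sources:
        metrics.extend(source.fetch_metrics())
    return metrics
```

Each adapter translates its vendor's format into the domain object, so adding or swapping a vendor does not change the use case.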

Another example:

If you are gathering information about Bugs, it does not matter if you gather that information from Jira or others; what matters is the concept of Bugs (the domain) for you.

If you are gathering information about Test executions, it does not matter if you gather that information from Jira as well; what matters is the concept of Test executions (the domain) for you.

So, for each of the domains, you need to implement a separate Lambda function (in the context of the Step Function) that resolves the ETL for that domain.
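As a rough illustration of one Lambda per domain, the following sketch builds a state machine definition in Amazon States Language with one Task state per domain. The domain names, the placeholder ARNs, and the choice of a `Parallel` state (rather than running the domains in sequence) are my assumptions, not something your workflow must follow.

```python
import json

# Hypothetical mapping of domain -> Lambda ARN (placeholders, not real ARNs).
DOMAIN_LAMBDAS = {
    "Observability": "arn:aws:lambda:REGION:ACCOUNT_ID:function:observability-etl",
    "Applications": "arn:aws:lambda:REGION:ACCOUNT_ID:function:applications-etl",
}


def build_state_machine(domain_lambdas: dict[str, str]) -> dict:
    """Build a definition with one branch (one Lambda) per domain."""
    branches = []
    for domain, arn in domain_lambdas.items():
        state_name = f"{domain}Etl"
        branches.append(
            {
                "StartAt": state_name,
                "States": {
                    state_name: {"Type": "Task", "Resource": arn, "End": True}
                },
            }
        )
    return {
        "Comment": "One ETL Lambda per domain",
        "StartAt": "RunDomainEtls",
        "States": {
            "RunDomainEtls": {"Type": "Parallel", "Branches": branches, "End": True}
        },
    }


definition = json.dumps(build_state_machine(DOMAIN_LAMBDAS), indent=2)
```

Adding a new domain then means adding a new Lambda and one more entry in the mapping, without touching the existing ETLs.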

If you still want to reuse code (which I would recommend), when using Step Functions, you can leverage AWS Lambda layers. They allow you to share common code across multiple Lambda functions within the same workflow.
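A small sketch of what that sharing might look like for Python Lambdas. The module name `etl_common` and the helper `normalize_keys` are hypothetical; everything is shown in one block so it runs on its own, with comments marking where each piece would live.

```python
# Would live in the layer, packaged as python/etl_common.py inside the layer
# zip. For Python runtimes, Lambda puts /opt/python on the import path, so
# each function could then do: from etl_common import normalize_keys
def normalize_keys(record: dict) -> dict:
    """Shared helper: turn keys like ' Issue Key ' into 'issue_key'."""
    return {
        key.strip().lower().replace(" ", "_"): value
        for key, value in record.items()
    }


# Would live in the Bugs Lambda (hypothetical handler and event shape).
def bugs_handler(event, context):
    return [normalize_keys(record) for record in event["records"]]


# Would live in the Test executions Lambda (hypothetical handler and event shape).
def executions_handler(event, context):
    return [normalize_keys(record) for record in event["records"]]
```

Both handlers stay thin and domain-specific, while the common code is versioned and deployed once in the layer.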

As you can see, this is not exactly a hexagonal architecture, but an adaptation of it.

I would love to hear your thoughts on Software Architecture in general, and for ETLs in particular. Leave a comment; I'll read it.

Cheers!